Knowledge is Power

We have recently discussed how pattern-based AI provides much more capacity and insight into the predictions it makes, especially compared with artificial neural networks. But how do we use this additional information?

While we are still learning new ways data scientists can use our pattern-based AI, we do have some good ideas on how our information-rich outputs can help our customers do more with AI. Let’s look at a few concepts.

Build better machine learning models in less time

Data scientists have a tough job. Machine learning models are complicated. Data scientists must tune these models repeatedly as they work to discover the model parameters that yield the best predictions.

But when a model behaves poorly, as models often do, how does a data scientist make it better? For neural networks, the “brightest bulb” nature of their output (whichever output node is most strongly activated wins) gives the data scientist little insight to work with.

This is like trying to troubleshoot engine problems by measuring only the exhaust. You can gain some insights, but you really need better, more usable diagnostics.

Pattern-based AI preserves the integrity of the input values all the way through to its prediction. This gives data scientists access to more information and deeper insight into how each prediction was reached. Think of our pattern-based AI as an X-ray of your ML model: it lets you see the model's inner workings.

Data scientists can learn faster

When making predictions, our pattern-based AI system helps the data scientist understand:

- What input features are most informative?

- Which input features are non-informative?

- For an informative feature, what range of input values is most common for each class?

- Are there unique subclasses within a class? What are the characteristics of each subclass?

- What combination of features does the prediction contain?

- What are the characteristics of a false positive/negative relative to a true positive/negative?
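The first three questions in this list can be explored on any labeled tabular dataset. The sketch below is a generic, model-independent heuristic and not the pattern-based method itself. It makes several assumptions: there are two classes, and each sample is a dict of numeric features. It scores each feature by the gap between the class means divided by the pooled standard deviation, which is a simple effect-size measure. It also reports the interquartile range of each feature per class. The helper `feature_report` and the feature names are hypothetical.

```python
from statistics import mean, quantiles, stdev


def feature_report(rows, labels):
    """Rank features by how well they separate two classes (hypothetical helper).

    Assumes exactly two classes, at least two samples per class, and rows
    given as dicts that share the same numeric features.
    """
    classes = sorted(set(labels))
    if len(classes) != 2:
        raise ValueError("this sketch assumes exactly two classes")
    report = {}
    for feature in rows[0]:
        by_class = {
            c: [row[feature] for row, y in zip(rows, labels) if y == c]
            for c in classes
        }
        a, b = by_class[classes[0]], by_class[classes[1]]
        gap = abs(mean(a) - mean(b))
        pooled_sd = ((stdev(a) ** 2 + stdev(b) ** 2) / 2) ** 0.5
        # Effect-size style score: large means informative, near 0 means non-informative.
        if pooled_sd == 0:
            score = 0.0 if gap == 0 else float("inf")
        else:
            score = gap / pooled_sd
        # quantiles(..., n=4) returns [Q1, median, Q3]; keep Q1 and Q3.
        iqr = {}
        for c, values in by_class.items():
            q1, _, q3 = quantiles(values, n=4)
            iqr[c] = (q1, q3)
        report[feature] = {"score": score, "iqr": iqr}
    # Most informative features first.
    return dict(sorted(report.items(), key=lambda kv: -kv[1]["score"]))


# Made-up data: "size" separates the classes, "noise" does not.
rows = [
    {"size": 1.0, "noise": 5.0}, {"size": 1.2, "noise": 3.0},
    {"size": 1.1, "noise": 4.0}, {"size": 3.0, "noise": 4.0},
    {"size": 3.2, "noise": 5.0}, {"size": 3.1, "noise": 3.0},
]
labels = ["benign"] * 3 + ["malignant"] * 3
report = feature_report(rows, labels)
for feature, info in report.items():
    print(feature, round(info["score"], 1), info["iqr"])
```

A score near zero flags a candidate non-informative feature. The per-class interquartile ranges show which values are typical for each class.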

The ability to troubleshoot and improve AI models is perhaps one of the most overlooked benefits of explainable AI. And this troubleshooting capability isn’t just about the model.

Sometimes the input data itself has problems, and now you will be able to see that too. By providing insights that reduce model development time, explainable AI makes data scientists more productive. They will be able to cut the enormous resources consumed by testing and retesting models. Explainable AI will make superstars out of data scientists!

Deeper understanding of the data

Once our explainable AI has helped the data scientist create a great model, we can take advantage of our pattern-based AI to provide a deeper level of understanding about the data itself.

Recall the structure of our output layer and the information it contains. Because each output vector of our pattern-based system is rich in information, we can put these vectors to work in interesting ways.

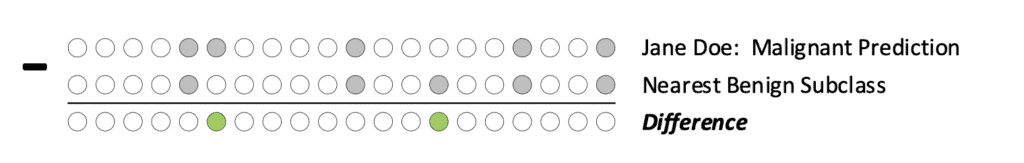

For example, we can take the difference between a patient prediction and the class signatures for certain diagnoses. Consider a malignant cancer prediction:

The image above shows how vector operations can be performed on the predictions made by our pattern-based AI system. If our system predicts from Jane Doe's medical record that she will develop cancer, wouldn't it be interesting to see what the shortest path to a benign classification might be? Could Jane's doctor use this information to prescribe more effective treatment?
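The difference operation described above can be sketched generically. This is a minimal illustration, not our system's implementation. It assumes that a prediction and each class signature are vectors over the same named features, represented here as Python dicts. It also treats the straight-line (Euclidean) difference to the target signature as the "shortest path." The helper `path_to_class`, the feature names, and all values are hypothetical.

```python
import math


def path_to_class(prediction, signatures, target):
    """Return the Euclidean distance from a prediction to a target class
    signature, plus the per-feature differences ranked by size.

    Hypothetical helper: assumes `prediction` and every signature are dicts
    mapping the same feature names to numeric values.
    """
    signature = signatures[target]
    # Difference vector: how far each feature must move to match the signature.
    diff = {f: signature[f] - prediction[f] for f in signature}
    distance = math.sqrt(sum(d * d for d in diff.values()))
    # Largest gaps first: the features contributing most to the distance.
    ranked = sorted(diff.items(), key=lambda kv: -abs(kv[1]))
    return distance, ranked


# Hypothetical, made-up values for illustration only.
jane = {"tumor_size": 0.9, "cell_uniformity": 0.8, "age": 0.5}
signatures = {
    "benign": {"tumor_size": 0.2, "cell_uniformity": 0.3, "age": 0.5},
    "malignant": {"tumor_size": 0.85, "cell_uniformity": 0.75, "age": 0.6},
}
distance, ranked = path_to_class(jane, signatures, "benign")
print(f"distance to benign: {distance:.3f}")
for feature, delta in ranked:
    print(f"{feature}: {delta:+.2f}")
```

Ranking by absolute difference puts the largest gaps first. In practice, only features that can actually change would be meaningful levers.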

Because each node in the output layer of our pattern-based system contains rich information, the differences between these vectors can answer interesting questions including:

- What is the shortest path to a better prediction?

- Is there any unintended bias in my predictions?

- What aspect of the data is responsible for different predictions?

Explainable AI and Data Anomaly Detection

Increased capacity, and the simpler networks it allows, can help in other ways too. Remember that a neural network can only classify objects it has been trained on.

If a neural network is trained on 20 objects, its entire universe consists of only those 20 objects. If a foreign object is put into the system, the brightest bulb concept ensures that this never-before-seen object will be associated with one of the objects seen in the training set. After all, one of the bulbs will still be deemed the brightest, even if the resulting prediction is wrong. The results of this kind of ‘force-fit’ classification can be disastrous.
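To make the brightest bulb behavior concrete, here is a minimal sketch. It assumes a conventional classifier with a softmax output layer followed by argmax, which is a common but not universal design. The class names and scores are invented. Even when the scores are nearly identical, as they might be for an object unlike anything in training, the function still returns one of the trained classes. It has no way to answer "none of the above."

```python
import math


def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def brightest_bulb(scores, classes):
    """Argmax classification: always returns one of the known classes."""
    probs = softmax(scores)
    best = max(range(len(classes)), key=lambda i: probs[i])
    return classes[best], probs[best]


# A network trained on three classes (hypothetical).
classes = ["cat", "dog", "bird"]
# Nearly uniform scores, as might come from an object unlike any training example.
label, confidence = brightest_bulb([0.11, 0.10, 0.09], classes)
print(label, round(confidence, 3))
```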

A smarter AI system should know a lot about the objects it has been trained on, but it should also know when an unrecognized object (an anomaly) has been introduced to the system.

In the real world, the unexpected happens. Sometimes, the unexpected is just a random variation and may be inconsequential. In other cases, it could be critical. An anomaly could represent a failure mode that has never occurred before, changing tactics by an adversary, or even a mutation of a virus that is being studied.

In each of these cases, the rich information contained in the output of our pattern-based AI system lets us know instantly when an anomaly has occurred. Better yet, when we combine this anomaly detection capability with the intrinsic explainability of our system, we know not only that an anomaly has occurred but also what its characteristics are!
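One generic way to give a classifier a "none of the above" answer is a distance threshold: compare the input with a signature for every known class, and reject it if it is far from all of them. The sketch below illustrates this idea under stated assumptions and is not our pattern-based method. Each class signature is a per-feature mean and standard deviation, and the distance is the root-mean-square z-score. The threshold of 3 is arbitrary. The per-feature z-scores against the nearest class double as a simple description of how the anomaly differs. The helper `detect_anomaly` and the sensor features and values are hypothetical.

```python
import math


def detect_anomaly(x, signatures, threshold=3.0):
    """Return (label or None, nearest class, distance, ranked deviations).

    `signatures` maps class name -> {feature: (mean, std)}. The label is
    None when x is farther than `threshold` from every class signature.
    """
    best_class, best_dist, best_z = None, math.inf, {}
    for cls, sig in signatures.items():
        # Per-feature z-scores: how many standard deviations x is from the class.
        z = {f: (x[f] - mu) / sd for f, (mu, sd) in sig.items()}
        # Root-mean-square z-score: one scale-free distance per class.
        dist = math.sqrt(sum(v * v for v in z.values()) / len(z))
        if dist < best_dist:
            best_class, best_dist, best_z = cls, dist, z
    label = best_class if best_dist <= threshold else None
    # Features that deviate most from the nearest class describe the anomaly.
    deviations = sorted(best_z.items(), key=lambda kv: -abs(kv[1]))
    return label, best_class, best_dist, deviations


# Hypothetical machine-health signatures: {feature: (mean, std)}.
signatures = {
    "normal": {"temp": (70.0, 2.0), "vibration": (0.5, 0.1)},
    "overheating": {"temp": (95.0, 3.0), "vibration": (0.6, 0.1)},
}
# A reading unlike either class: normal temperature, extreme vibration.
label, nearest, dist, deviations = detect_anomaly(
    {"temp": 71.0, "vibration": 2.5}, signatures
)
print(label, nearest, round(dist, 1), deviations[0][0])
```

Here the reading is rejected as an anomaly. The ranked deviations point to vibration, not temperature, as the feature that sets it apart.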

Try that with a traditional AI system using brightest bulb classification techniques!

Explainable AI is all about the patterns

At Natural Intelligence, we are believers in the future of pattern-based AI systems. We believe that Mother Nature got it right. Humans are amazingly intelligent because of our ability to see and understand patterns. The future of AI belongs to systems that can do the same.
